Applied Deep Learning with TensorFlow 2: Learn to Implement Advanced Deep Learning Techniques with Python

Product Information

ISBN-13: 9781484280195

Publisher: Apress

Author: Umberto Michelucci

Publication date: 2022/04/12

Binding: Paperback

Dimensions: 25.4 cm × 17.8 cm × 2.1 cm (height/width/thickness)

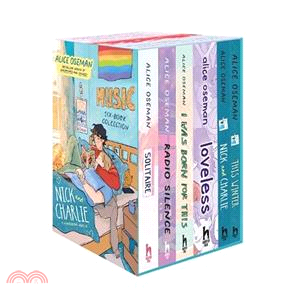

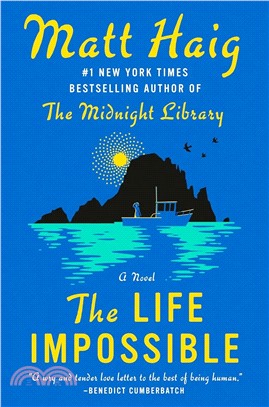

Product Description

Chapter 1: Optimization and neural networks

Subtopics: How to read the book; Introduction to the book

Chapter 2: Hands-on with One Single Neuron

Subtopics: Overview of optimization; A definition of learning; Constrained vs. unconstrained optimization; Absolute and local minima; Optimization algorithms with focus on Gradient Descent; Variations of Gradient Descent (mini-batch and stochastic); How to choose the right mini-batch size

Chapter 3: Feed Forward Neural Networks

Subtopics: A short introduction to matrix algebra; Activation functions (identity, sigmoid, tanh, swish, etc.); Implementation of one neuron in Keras; Linear regression with one neuron; Logistic regression with one neuron

Chapter 4: Regularization

Subtopics: Matrix formalism; Softmax activation function; Overfitting and bias-variance discussion; How to implement a fully connected network with Keras; Multi-class classification with the Zalando dataset in Keras; Gradient descent variation in practice with a real dataset; Weight initialization; How to compare the complexity of neural networks; How to estimate memory used by neural networks in Keras

Chapter 5: Advanced Optimizers

Subtopics: An introduction to regularization; l_p norm; l_2 regularization; Weight decay when using regularization; Dropout; Early Stopping

Chapter 6: Hyper-Parameter Tuning

Subtopics: Exponentially weighted averages; Momentum; RMSProp; Adam; Comparison of optimizers

Chapter 7: Convolutional Neural Networks

Subtopics: Introduction to Hyper-parameter tuning; Black box optimization; Grid Search; Random Search; Coarse to fine optimization; Sampling on logarithmic scale; Bayesian optimization

Chapter 8: Brief Introduction to Recurrent Neural Networks

Subtopics: Theory of convolution; Pooling and padding; Building blocks of a CNN; Implementation of a CNN with Keras; Introduction to recurrent neural networks; Implementation of an RNN with Keras

Chapter 9: Autoencoders

Subtopics: Feed Forward Autoencoders; Loss function in autoencoders; Reconstruction error; Application of autoencoders: dimensionality reduction; Application of autoencoders: Classification with latent features; Curse of dimensionality; Denoising autoencoders; Autoencoders with CNN

Chapter 10: Metric Analysis

Subtopics: Human level performance and Bayes error; Bias; Metric analysis diagram; Training set overfitting; How to split your dataset; Unbalanced dataset: what can happen; K-fold cross validation; Manual metric analysis: an example

Chapter 11: Generative Adversarial Networks (GANs)

Subtopics: Introduction to GANs; The building blocks of GANs; An example of implementation of GANs in Keras

Appendix 1: Introduction to Keras

Subtopics: Sequential model; Keras Layers; Funct

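Shopping Notes

Cover images for foreign-language books are samples provided by the publisher. The item actually shipped will be the edition currently available from the publisher. For some titles, because of special supply conditions at the publisher, the exchange rate will be adjusted according to actual conditions.

For out-of-stock items, once you complete the order process we will place an order on your behalf and have the item shipped by air. To shorten the wait, we recommend ordering foreign-language books separately from other items to get the fastest pickup time. The average procurement time is 1 to 2 months.

To protect your rights, 三民網路書店 offers members a seven-day inspection period, starting on the day the item is received.

To return an item, please send it back within the inspection period. The item must be in brand-new condition with complete packaging (the item, accessories, invoice, any free gifts, and so on); otherwise the return will not be accepted.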